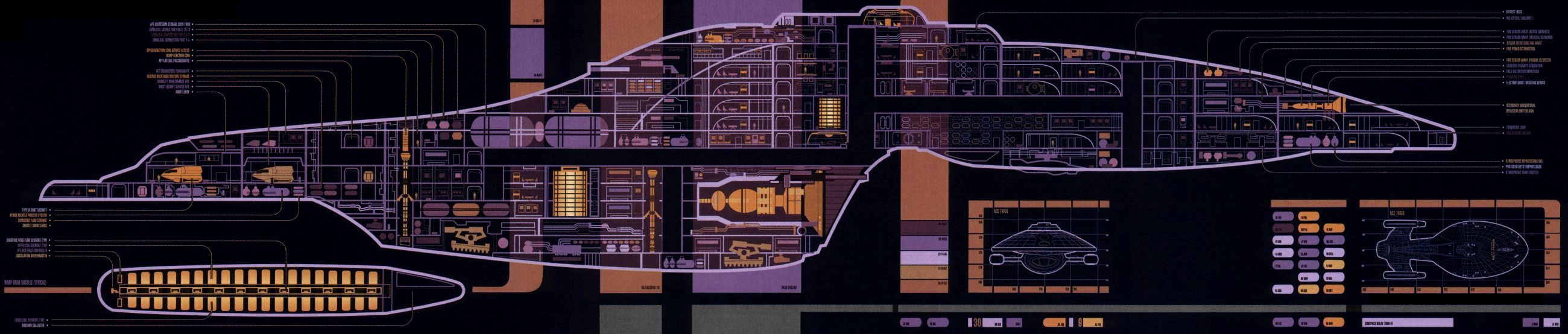

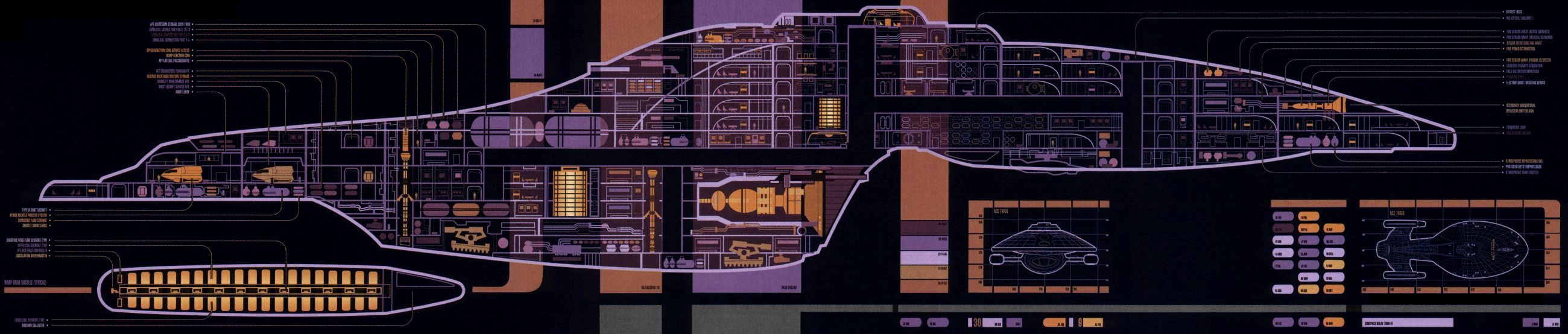

One thing I have always liked about the Engineering section from Star Trek: The Next Generation was the Master Systems Display. This large display, which was found on the ship’s bridge in later series, contained a cutaway of the ship that showed a detailed overview of the operational status of the ship’s various systems.

The Master Systems display from Star Trek Voyager. Linked from Memory Alpha.

Although the Master Systems Display is fictional, the idea of having one place to look to get the current status of all your systems can be very appealing. This is especially true in a multi-user or multi-tenant environment where you need to quickly identify the systems and/or processes that are not performing optimally. And this information needs to be displayed in a way that makes it easy to understand while providing administrators with a way to dig deeper if they need to.

Some tools with these functions already exist in the network space. They can give a nice graphical layout of the network, and they can show how heavily a link is being utilized by changing its color based on bandwidth utilization. PHP Weathermap is a FOSS example of a product in this space.

ControlUp 4.1 is the latest version of a similar tool in the systems management space. Although it doesn’t provide a graphical map of where my systems reside, it provides an easy-to-read list of my systems and a grid showing their current status and a number of important monitored metrics. It also lets me drill down to child items such as the virtual machines in a cluster or the processes running on a Windows OS.

The ControlUp Management Console showing a host in my home lab with all VMs on that host. The Stress Level column and color-coding of potential problems make it easy to identify trouble spots. Servers that can’t run the agent won’t pull all stats.

So why make the comparison to the Master Systems Display from Star Trek? If you look at the screenshot above, not only do I see my ESXi host stats, but I can quickly see that host’s VMs on the same screen. I can see where my trouble spots are and how they contribute to the overall health of the system they are running on.

What Is ControlUp?

ControlUp is a monitoring and management platform designed primarily for multi-user environments. It was originally designed to monitor RDSH and XenApp environments, and over the years it has been extended to include generic Windows servers, vSphere, and now Horizon View.

ControlUp is an agent-based monitoring system for Windows, and the agent is an extremely lightweight application that can be installed permanently as a Windows Service or be configured to be uninstalled when an admin user closes the administrative console. ControlUp also has a watchdog service that can be installed on a server that will collect metrics and handle alerting should all running instances of a console be closed.

One component that you will notice is missing from the ControlUp requirements list is a database of any sort. ControlUp does not use a database to store historical metrics, nor is this information stored out “in the cloud.” This design decision is a double-edged sword – it makes it very easy to set up a monitoring environment, but viewing historical data and trending based on past usage aren’t integrated into the product in the same way that they are in other monitoring platforms.

That’s not to say that these capabilities don’t exist in the product. They do, but they work in a completely different manner. ControlUp allows for scheduled exports of monitoring data to the file system, and these exported files can be consumed by a trending analysis component. There are pros and cons to this approach, but I don’t want to spend too much time on this particular design choice as it would detract from the benefits of the program.

What I will say is this, though – ControlUp provides a great immediate view of the status of your systems, and it can supplement any other monitoring system out there. The other system can handle long-term historical data, trending, and analysis, and ControlUp can handle the immediate picture.
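To make the export-then-trend idea a little more concrete, here is a minimal sketch of how a second tool might consume exported files. The file layout is purely an assumption for illustration: I am pretending each export is a CSV with hypothetical `timestamp`, `computer`, and `cpu_pct` columns, which is not necessarily what ControlUp writes.

```python
import csv
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def daily_averages(rows: Iterable[dict]) -> Dict[Tuple[str, str], float]:
    """Average CPU usage per (computer, day) from exported samples.

    Assumes each row has hypothetical 'timestamp' (ISO 8601), 'computer',
    and 'cpu_pct' fields. These column names are illustrative only.
    """
    buckets = defaultdict(list)
    for row in rows:
        day = row["timestamp"][:10]  # the YYYY-MM-DD prefix of an ISO 8601 timestamp
        buckets[(row["computer"], day)].append(float(row["cpu_pct"]))
    return {key: mean(values) for key, values in buckets.items()}


def load_export(path: str) -> Dict[Tuple[str, str], float]:
    """Read one exported CSV file and summarize it by computer and day."""
    with open(path, newline="") as handle:
        return daily_averages(csv.DictReader(handle))
```

Running `load_export` over each scheduled export and storing the results is the kind of job the "other" monitoring system would own in this arrangement.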

How It Works

As I mentioned above, ControlUp is an agent-based monitoring package. That agent can be pushed to the monitored system from the management console or downloaded and installed manually. I needed to take both approaches at times as a few of my servers would not take a push installation. That seems to have gotten better with the more recent editions.

The ControlUp Agent polls a number of different metrics from the host or virtual machine – everything from CPU and RAM usage to the per-session details and processes for each logged-in user. This also includes any service accounts that might be running services on the host.

If your machines are VMs on vSphere, you can configure ControlUp to connect to vCenter to pull statistics. It will match up the statistics that are taken from inside the VM with those taken from vCenter and present them side-by-side, so administrators will be able to see the Windows CPU usage stats, the vCenter CPU usage stats, and the CPU Ready stats next to each other when trying to troubleshoot an issue.
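Conceptually, that side-by-side view is a join of two data sources on the VM name. The sketch below is my own illustration of the idea, not ControlUp's implementation; the dictionary layout and field names such as `cpu_pct` and `cpu_ready_pct` are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class VmRow:
    name: str
    guest_cpu_pct: Optional[float]  # measured inside the VM by the agent
    host_cpu_pct: Optional[float]   # reported by the hypervisor
    cpu_ready_pct: Optional[float]  # reported by the hypervisor


def merge_stats(guest: Dict[str, dict], hypervisor: Dict[str, dict]) -> List[VmRow]:
    """Join in-guest and hypervisor stats on VM name.

    VMs that appear in only one source are kept, with the missing
    columns left as None (for example, a VM that cannot run the agent).
    """
    rows = []
    for name in sorted(set(guest) | set(hypervisor)):
        g = guest.get(name, {})
        h = hypervisor.get(name, {})
        rows.append(
            VmRow(name, g.get("cpu_pct"), h.get("cpu_pct"), h.get("cpu_ready_pct"))
        )
    return rows
```

Keeping the unmatched rows mirrors what the console shows for machines that can't run the agent: the hypervisor numbers are there, but the in-guest columns stay empty.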

Grid showing active user and service account, number of computers that they’re logged into, and system resources that the accounts are utilizing.

For VDI and RDSH-based end-user environments, ControlUp will also track session statistics. This includes everything from how much CPU and RAM the user is consuming in the session to how long it took them to log in and the client they’re connecting from. In Horizon environments, this will include a breakdown of how long it took each part of the user profile to load.

Grid showing a user session with the load times of the various profile components and other information.

The statistics that are collected are used to calculate a “stress level.” This shows how hard the system is working, and it highlights the statistics that should be watched more closely or are dangerously high. Any statistics that are moderately high or in the warning zone show up in the grid as yellow, and anything that is dangerously high is colored red. The combination gives administrators a quick summary of the machine’s health, and the color coding calls out exactly which statistics earned the machine its warning.

Not only can I see that the first server may have some performance issues, but the color coding immediately calls out why. In this case, the server is utilizing over 80% of its available RAM.
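I don't know the exact formula ControlUp uses for its stress level, but the general pattern of threshold-based color coding is easy to sketch. Everything below is an assumption for illustration: the threshold values, the metric names, and the "worst metric wins" rule for the overall level.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Level(IntEnum):
    NORMAL = 0
    WARNING = 1   # shown as yellow in the grid
    CRITICAL = 2  # shown as red in the grid


# Hypothetical (warning, critical) thresholds per metric, in percent.
THRESHOLDS = {
    "cpu_pct": (70.0, 90.0),
    "ram_pct": (80.0, 95.0),
}


def classify(metric: str, value: float) -> Level:
    """Map a single metric value to a severity level."""
    warning, critical = THRESHOLDS[metric]
    if value >= critical:
        return Level.CRITICAL
    if value >= warning:
        return Level.WARNING
    return Level.NORMAL


def stress_level(metrics: Dict[str, float]) -> Tuple[Level, Dict[str, Level]]:
    """Return the overall level plus the metrics responsible for it.

    Assumes the overall level is simply the worst individual metric.
    """
    levels = {name: classify(name, value) for name, value in metrics.items()}
    overall = max(levels.values(), default=Level.NORMAL)
    flagged = {name: lvl for name, lvl in levels.items() if lvl > Level.NORMAL}
    return overall, flagged
```

With these made-up thresholds, a server at 35% CPU and 83% RAM would come back as a warning with only the RAM metric flagged, which is the same kind of "here is the problem and here is why" answer the grid gives.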

Configuration Management

One other nice feature of ControlUp is that it can do some configuration comparison and management. Say I have a group of eight application servers, and they all run the same application. If I need to deploy a registry key, or change a service from disabled to automatic, I would normally need to use PowerShell, Group Policy, and/or manually touch all eight servers in order to make the change.

The ControlUp Management Console allows an administrator to compare settings on a group of servers – such as a registry key – and then make that change across the entire group in one batch.
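The comparison half of that workflow boils down to finding the servers whose setting differs from the rest of the group. Here is a rough sketch of that idea; it is my own illustration rather than how ControlUp does it, and it assumes the most common value in the group is the one you want.

```python
from collections import Counter
from typing import Dict, Tuple


def find_drift(settings: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
    """Compare one setting across a group of servers.

    `settings` maps a server name to the value found on that server,
    such as a service start mode or a registry value. Returns the most
    common value and the servers that differ from it. On a tie, the
    value seen first is treated as the baseline.
    """
    if not settings:
        raise ValueError("no servers to compare")
    baseline, _count = Counter(settings.values()).most_common(1)[0]
    outliers = {server: value for server, value in settings.items() if value != baseline}
    return baseline, outliers
```

For my eight hypothetical application servers, this would take the start mode of a service on each one and hand back the two or three machines that still need the change.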

In my lab, I don’t have much of a use for this feature. However, I can definitely see the use case for it in large environments where there are multiple servers serving the same role within the environment. It can also be helpful for administrators who don’t know PowerShell or have to make changes across multiple versions of Windows where PowerShell may not be present.

Conclusion

As I stated in my opening, I liken ControlUp to that master systems display. I think that this system gives a good picture of the immediate health of an environment, but it also provides enough tools to drill down to identify issues.

Due to how it handles historical data and trending, I think that ControlUp needs to be used in conjunction with another monitoring system. I don’t think that should dissuade anyone from looking at it, though, as the operational visibility benefits outweigh having to implement a second system.

If you want more information on ControlUp, you can find it on their website at http://www.controlup.com/

![clip_image001[10] clip_image001[10]](https://thevirtualhorizon.com/wp-content/uploads/2015/06/clip_image00110_thumb.png?w=244&h=137)
