And we’re back…this week with the final part of deploying a Horizon 2006 environment – deploying the Unified Access Gateway to enable remote access to desktops.

Before we go into the deployment process, let’s dive into the background on the appliance.

The Unified Access Gateway (UAG) is a purpose-built virtual appliance designed to be the remote access component for VMware Horizon and Workspace ONE. The appliance is hardened for deployment in a DMZ, and it is designed to pass only authorized traffic from authenticated users into a secure network.

As of Horizon 2006, the UAG is the primary remote access component for Horizon. This wasn’t always the case: previous Horizon releases used the Horizon Security Server. The Security Server was a Windows Server running a stripped-down version of the Horizon Connection Server, and this component was deprecated and removed with Horizon 2006.

The UAG has some benefits over the Security Server. First, it does not require a Windows license. The UAG is built on Photon, VMware’s lightweight Linux distribution, and it is distributed as an appliance. Second, the UAG is not tightly coupled to a connection server, so you can use a load balancer between the UAG and the Connection Server to eliminate single points of failure.

And finally, multifactor authentication is validated on the UAG in the DMZ. When multifactor authentication is enabled, users are prompted for the second factor first, and they are only prompted for their Active Directory credentials if that authentication succeeds. The UAG supports multiple forms of MFA, including RSA, RADIUS, and SAML-based solutions, and setting up MFA on the UAG does not require any changes to the Connection Servers.

There have also been a couple of 3rd-party options that could be used with Horizon. I won’t be covering any of the other options in this post.

If you want to learn more about the Unified Access Gateway, including a deeper dive on its capabilities, sizing, and deployment architectures, please check out the Unified Access Gateway Architecture guide on VMware Techzone.

Deploying the Unified Access Gateway

There are two main ways to deploy the UAG. The first is a manual deployment where the UAG’s OVA file is manually deployed through vCenter, and then the appliance is configured through the built-in Admin interface. The second option is the PowerShell deployment method, where a PowerShell script and OVFTool are used to automatically deploy the OVA file, and the appliance’s configuration is injected from an INI file during deployment.

Typically, I prefer using the PowerShell deployment method. This method consists of a PowerShell deployment script and an INI file that contains the configuration for each appliance that you’re deploying. I prefer the PowerShell script over deploying the appliance through vCenter because the appliance is ready to use on first boot. It also allows administrators to track all configurations in a source control system such as GitHub, which provides both documentation for the configuration and change tracking. This method also makes it easy to redeploy or upgrade the Unified Access Gateway, because I only need to rerun the script with my config file and the new OVA file.

The PowerShell script requires the OVF Tool to be installed on the server or desktop where the PowerShell script will be executed. The latest version of the OVF Tool can be downloaded from MyVMware. PowerCLI is not required when deploying the UAG as OVF Tool will be deploying the appliance and injecting the configuration.

The zip file that contains the PowerShell scripts includes sample templates for different use cases. This includes Horizon use cases with RADIUS and RSA-based multifactor authentication. You can also find the reference guide for all options here.

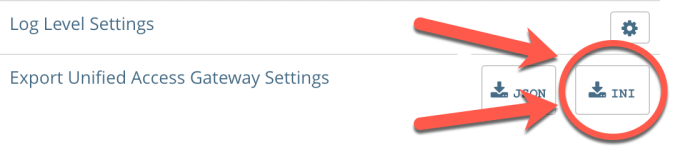

If you haven’t deployed a UAG before, are implementing a new feature on the UAG, or you’re not comfortable creating the INI configuration file from scratch, then you can use the manual deployment method to configure your appliance and then export the configuration in the INI file format that the PowerShell deployment method can consume. This exported configuration only contains the appliance’s Workspace ONE or Horizon configuration – you would still have to add in your vSphere and SSL Certificate configuration.

You can export the configuration from the UAG admin interface. It is the last item in the Support Settings section.
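If you go the export route, the exported file still needs your vSphere and certificate settings before the deployment script can use it. The Python sketch below shows one way to merge a hand-maintained base INI with the exported one using the standard library's configparser. It is a sketch under assumptions: the section and key names ([General], [SSLCert], [Horizon], target, pfxCerts, proxyDestinationUrl) are based on my reading of the sample templates and should be checked against the templates in your zip file, and all values are hypothetical. Be aware that configparser drops the comments from the templates when it writes the merged file.

```python
import configparser
import io


def _uag_parser() -> configparser.ConfigParser:
    # interpolation=None keeps '%' in values literal;
    # optionxform=str keeps key names in their original case.
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str
    return parser


def merge_uag_ini(base_text: str, exported_text: str) -> str:
    """Merge a hand-maintained base INI (vSphere and certificate settings)
    with an INI exported from the UAG admin interface.

    Sections from both files are combined. If the same key appears in the
    same section of both files, the exported value wins because it is read last.
    """
    merged = _uag_parser()
    merged.read_string(base_text, source="base")
    merged.read_string(exported_text, source="exported")
    out = io.StringIO()
    # Write key=value without spaces, matching the style of the sample templates.
    merged.write(out, space_around_delimiters=False)
    return out.getvalue()


# Hypothetical example values; replace them with your own environment.
BASE = """\
[General]
name=UAG1
target=vi://administrator@vsphere.local:PASSWORD@vcenter.example.com/Datacenter1/host/Cluster1/

[SSLCert]
pfxCerts=C:\\UAG\\certs\\uag1.pfx
"""

EXPORTED = """\
[Horizon]
proxyDestinationUrl=https://horizon-internal.example.com
"""

if __name__ == "__main__":
    print(merge_uag_ini(BASE, EXPORTED))
```

Keeping the vSphere and certificate settings in a separate base file means a fresh export from the admin interface can be merged in again without retyping them.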

One other thing that can trip people up when creating their first UAG deployment file is the deployment path used by OVFTool. This is not always straightforward, and vCenter has some “hidden” objects that need to be included in the path. OVFTool can be used to discover the path where the appliance will be deployed.

You can use OVFTool to connect to your vCenter with a partial path, and then view the objects in that location. It may require multiple connection attempts with OVFTool to build out the path. You can see an example of this over at the VMwareArena blog on how to export a VM with OVFTool or in question 8 in the troubleshooting section of the Using PowerShell to Deploy the Unified Access Gateway guide.
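The trial-and-error approach can be scripted. The Python sketch below assumes ovftool is on your PATH and uses hypothetical vCenter and inventory names. It builds successively longer vi:// locators and can run OVF Tool against one of them; OVF Tool typically reports the kind of object it found at that level along with the possible completions, which gives you the next segment to add. Note the hidden host folder between the datacenter and the host or cluster name. If your names contain special characters, check the OVF Tool documentation on escaping them.

```python
import subprocess


def partial_locators(user: str, vcenter: str, segments: list[str]) -> list[str]:
    """Build the successively longer vi:// locators to probe with OVF Tool,
    starting at the vCenter root and adding one inventory segment at a time."""
    locator = f"vi://{user}@{vcenter}/"
    locators = [locator]
    for segment in segments:
        locator += segment.strip("/") + "/"
        locators.append(locator)
    return locators


def probe(locator: str, ovftool: str = "ovftool") -> int:
    """Run OVF Tool against one partial locator.

    Output goes straight to the console so you can answer the password prompt
    and read what OVF Tool reports at that level. A non-zero exit code is
    likely here, since a partial path is not a complete deployment target.
    """
    completed = subprocess.run([ovftool, locator])
    return completed.returncode


if __name__ == "__main__":
    # Hypothetical names; extend the list one segment at a time as you discover them.
    for loc in partial_locators("administrator@vsphere.local", "vcenter.example.com",
                                ["Datacenter1", "host"]):
        print(loc)
```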

Before deploying the UAG, we need to get some prerequisites in place. These are:

- Download the Unified Access Gateway OVA file, PowerShell deployment script zip file, and the latest version of OVFTool from MyVMware.

- Right click on the PowerShell zip file and select Properties.

- Click Unblock. This step is required because the file was downloaded from the Internet and is untrusted by default, which can prevent the scripts from executing after we unzip them.

- Extract the contents of the downloaded ZIP file to a folder on the system where the deployment script will be run. The ZIP file contains multiple files, but we will only be using the uagdeploy.ps1 script file and the uagdeploy.psm1 module file. The other scripts are used to deploy the UAG to Hyper-V, Azure, and AWS EC2. The zip file also contains a number of default templates. When deploying the access points for Horizon, I recommend starting with the UAG2-Advanced.ini template. This template provides the most options for configuring Horizon remote access and networking. Once you have the UAG deployed successfully, I recommend copying the relevant portions of the SecurID or RADIUS auth templates into your working AP template. This allows you to test remote access and your DMZ networking and routing before adding in MFA.

- Before we start filling out the template for our first access point, there are some things we’ll need to do to ensure a successful deployment. These steps are:

- Ensure that the OVF Tool is installed on your deployment machine.

- Locate the UAG’s OVA file and record the full file path. The OVA file can be placed on a network share.

- We will need a copy of the certificate, including any intermediate and root CA certificates, and the private key in PFX or PEM format. Place these files into a local or network folder and record the full path. If you are using PEM files, the certificate files should be concatenated so that the certificate and any CA certificates in the chain are in one file, and the private key should not have a password on it. If you are using PFX files, you will be prompted for a password when deploying the UAG.

- We need to create the path to the vSphere resources that OVF Tool will use when deploying the appliance. This path looks like `vi://user:PASSWORD@vcenter.fqdn.orIP/DataCenter Name/host/Host or Cluster Name/`. OVF Tool is case sensitive, so make sure that the datacenter name and host or cluster names are entered exactly as they are displayed in vCenter.

The uppercase PASSWORD in the OVFTool string is a variable that prompts the user for a password before deploying the appliance. If you are automating your deployment, you can replace this with the password for the service account that will be used for deploying the UAG.

Note: I don’t recommend saving the service account password in the INI files. If you plan to do this, remember best practices around saving passwords in plaintext files and ensure that your service account only has the required permissions for deploying the UAG appliances.

- Generate the passwords that you will use for the appliance Root and Admin passwords.

- Get the SSL thumbprint of the certificate on your Connection Server, or on the load balancer in front of your Connection Servers.

- Fill out the template file. The file has comments for documentation, so it should be pretty easy to fill out. You will need to have a valid port group for all three networks, even if you are only using the OneNic deployment option.

- Save your INI file as <UAGName>.ini in the same directory as the deployment scripts.
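For the PEM option, the concatenation step is easy to get wrong by hand. Here is a minimal Python sketch that bundles the server certificate with its CA certificates and checks whether a private key still carries a password. It assumes the common leaf-first order (server certificate, then intermediates, then root) and one certificate per input file; the encrypted-key check is a simple text heuristic, not a full PEM parser.

```python
from pathlib import Path

PEM_BEGIN = "-----BEGIN CERTIFICATE-----"


def concat_pem(cert_texts: list[str]) -> str:
    """Join PEM certificates into one bundle in the order given.

    Pass the server certificate first, then any intermediates, then the root.
    """
    parts = []
    for index, text in enumerate(cert_texts):
        text = text.strip()
        if PEM_BEGIN not in text:
            raise ValueError(f"input {index} does not look like a PEM certificate")
        parts.append(text)
    return "\n".join(parts) + "\n"


def key_is_encrypted(key_text: str) -> bool:
    """Heuristic check for a password-protected PEM private key.

    PKCS#8 encrypted keys use an 'ENCRYPTED PRIVATE KEY' header, and the
    traditional OpenSSL format adds a 'Proc-Type: 4,ENCRYPTED' line.
    """
    return "ENCRYPTED" in key_text


def write_chain(cert_files: list[str], out_file: str) -> None:
    """Read the certificate files in order and write the combined bundle."""
    texts = [Path(p).read_text(encoding="ascii") for p in cert_files]
    Path(out_file).write_text(concat_pem(texts), encoding="ascii")
```

For example, write_chain(["uag1.crt", "intermediate.crt", "root.crt"], "uag1-chain.pem") would produce the single certificate file, where all file names are hypothetical.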

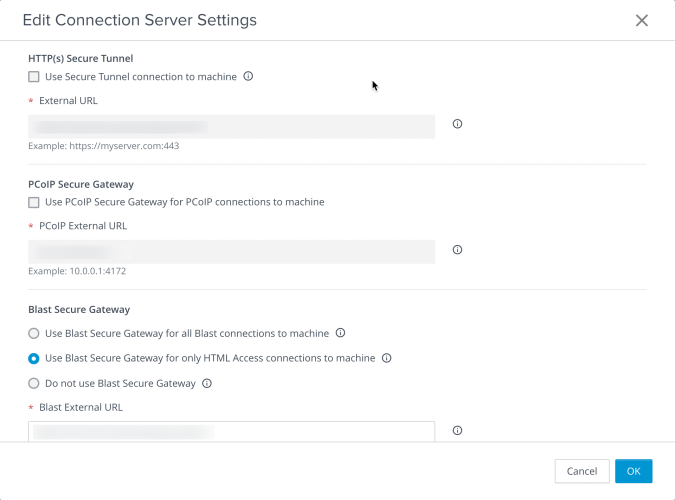
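The thumbprint can be read straight from the Connection Server or load balancer. The Python sketch below fetches the presented certificate with ssl.get_server_certificate, which does not validate the chain, so compare the result with what your browser shows. It then hashes the certificate with SHA-1. The output format, sha1= followed by space-separated hex pairs, is an assumption based on the sample templates; confirm it against the thumbprint field in your own template.

```python
import hashlib
import ssl


def thumbprint_from_der(der: bytes) -> str:
    """Format the SHA-1 digest of a DER-encoded certificate as 'sha1=aa bb ...'."""
    digest = hashlib.sha1(der).hexdigest()
    pairs = " ".join(digest[i:i + 2] for i in range(0, len(digest), 2))
    return f"sha1={pairs}"


def fetch_thumbprint(host: str, port: int = 443) -> str:
    """Fetch the certificate presented on host:port and return its thumbprint."""
    pem = ssl.get_server_certificate((host, port))
    der = ssl.PEM_cert_to_DER_cert(pem)
    return thumbprint_from_der(der)
```

For example, fetch_thumbprint("horizon-internal.example.com"), with a hypothetical host name, returns a string you can paste into the Connection Server thumbprint setting of your INI file.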

There is one change that we will need to configure on our Connection Servers before we deploy the UAGs – disabling the Blast and PCoIP secure gateways. If these are not disabled, the UAG will attempt to tunnel the user protocol session traffic through the Connection Server, and users will get a black screen instead of a desktop.

The steps for disabling the gateways are:

- Log into your Connection Server admin interface.

- Go to Settings -> Servers -> Connection Servers

- Select your Connection Server and then click Edit.

- Uncheck the following options:

- Use Secure Tunnel to Connect to machine

- Use PCoIP Secure Gateway for PCoIP connections to machine

- Under Blast Secure Gateway, select Use Blast Secure Gateway for only HTML Access connections to machine. This option may reduce the number of certificate prompts that users receive if using the HTML5 client to access their desktop.

- Click OK.

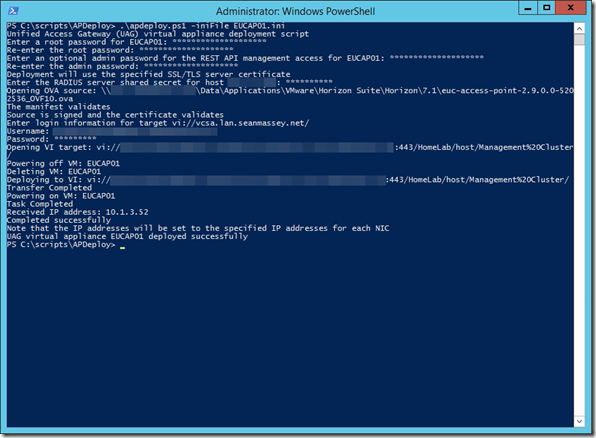

Once all of these tasks are done, we can start deploying the UAGs. The steps are:

- Open PowerShell and change to the directory where the deployment scripts are stored.

- Run the deployment script. The syntax is .\uagdeploy.ps1 -iniFile <UAGName>.ini

- Enter the appliance root password twice.

- Enter the admin password twice. This password is optional; however, if one is not configured, the REST API and Admin interface will not be available.

Note: The UAG Deploy script has parameters for the root and admin passwords. These can be used to reduce the number of prompts after running the script.

- If RADIUS is configured in the INI file, you will be prompted for the RADIUS shared secret.

- After the script opens the OVA and validates the manifest, it will prompt you for the password for accessing vCenter. Enter it here.

- If a UAG with the same name is already deployed, it will be powered off and deleted.

- The appliance OVA will be deployed. When the deployment is complete, the appliance will be powered on and get an IP address from DHCP.

- The appliance configuration defined in the INI file will be injected into the appliance and applied during the bootup. It may take a few minutes for configuration to be completed.
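If you deploy several UAGs, or redeploy them during an upgrade, the script can be driven from a small wrapper. This Python sketch assumes Windows PowerShell (powershell.exe) and the uagdeploy.ps1 script from the zip file, and it only passes the INI file parameter from the syntax above; the INI file names are hypothetical. It runs the deployments one at a time with the console attached, so you still answer the password prompts interactively.

```python
import subprocess
from pathlib import Path


def deploy_command(ini_file: str, script: str = r".\uagdeploy.ps1") -> list[str]:
    """Build the PowerShell command line for one UAG deployment."""
    return ["powershell.exe", "-NoProfile", "-File", script, "-iniFile", ini_file]


def deploy_all(ini_files: list[str], script_dir: str) -> None:
    """Deploy each UAG in turn from the folder that holds uagdeploy.ps1.

    The console stays attached so the script's password prompts still work.
    check=True stops the loop on a non-zero exit code, but PowerShell does not
    always return one for script errors, so review the output as well.
    """
    for ini in ini_files:
        cmd = deploy_command(ini)
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, cwd=Path(script_dir), check=True)


if __name__ == "__main__":
    # Hypothetical file names; print the commands instead of running them.
    for name in ["uag1.ini", "uag2.ini"]:
        print(" ".join(deploy_command(name)))
```

Deploying sequentially rather than in parallel keeps the interactive prompts for each appliance from interleaving.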

Testing the Unified Access Gateway

Once the appliance has finished its deployment and self-configuration, it needs to be tested to ensure that it is operating properly. The best way that I’ve found for doing this is to use a mobile device, such as a smartphone or cellular-enabled tablet, to access the environment using the Horizon mobile app. If everything is working properly, you should be prompted to sign in, and desktop pool connections should be successful.

If you are not able to sign in, or you can sign in but not connect to a desktop pool, the first thing to check is your firewall rules. Validate that TCP and UDP ports 443, 8443 and 4172 are open between the Internet and your Unified Access Gateway. You may also want to check your Connection Server configuration and ensure that HTTP Secure Gateway, PCoIP Secure Gateway, and Blast Secure Gateway are disabled.
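A quick TCP reachability check from outside your network can help narrow down a firewall problem. The Python sketch below tries a TCP connection to each of the ports listed above on the UAG address you pass in. It can only test TCP; the UDP side of ports 443, 8443 and 4172 cannot be confirmed this way, because UDP has no handshake to wait for.

```python
import socket

# TCP ports named above; the UDP side of these ports is not checked here.
UAG_TCP_PORTS = (443, 8443, 4172)


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or name resolution failed
        return False


def check_uag(host: str, ports: tuple[int, ...] = UAG_TCP_PORTS) -> dict[int, bool]:
    """Map each port to whether a TCP connection succeeded."""
    return {port: tcp_port_open(host, port) for port in ports}


if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        for port, ok in check_uag(sys.argv[1]).items():
            print(f"{sys.argv[1]}:{port}/tcp {'open' if ok else 'closed or filtered'}")
```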

If you’re deploying your UAGs with multiple NICs and your desktops live in a different subnet than your UAGs and/or your Connection Servers, you may need to statically define routes. The UAG typically has the default route set on the Internet or external interface, so it may not have routes to the desktop subnets unless they are statically defined. An example of a route configuration may look like the following:

routes1 = 192.168.2.0/24 192.168.1.1,192.168.3.0/24 192.168.1.1

If you need to make a routing change, the best way to handle it is to update the ini file and then redeploy the appliance.
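Because a malformed route only shows up after a redeploy, it can be worth generating the routes value rather than typing it. The Python sketch below formats (network, gateway) pairs in the same comma-separated layout as the example above, rejects networks written with host bits set, and can optionally check that each gateway sits on the NIC's subnet. The nic_network parameter is a hypothetical convenience of this sketch, not a UAG setting.

```python
import ipaddress
from typing import Optional


def routes_value(routes: list[tuple[str, str]], nic_network: Optional[str] = None) -> str:
    """Format (network, gateway) pairs as 'net gw,net gw' for a routes entry."""
    nic = ipaddress.ip_network(nic_network) if nic_network else None
    entries = []
    for network, gateway in routes:
        # strict=True rejects a network written with host bits set,
        # such as 192.168.2.1/24, which is an easy typo to make.
        net = ipaddress.ip_network(network, strict=True)
        gw = ipaddress.ip_address(gateway)
        if nic is not None and gw not in nic:
            raise ValueError(f"gateway {gw} is not on the NIC subnet {nic}")
        entries.append(f"{net} {gw}")
    return ",".join(entries)


if __name__ == "__main__":
    # Same values as the routes1 example above.
    print("routes1 = " + routes_value([("192.168.2.0/24", "192.168.1.1"),
                                       ("192.168.3.0/24", "192.168.1.1")],
                                      nic_network="192.168.1.0/24"))
```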

Once deployed and tested, your Horizon infrastructure is configured, and you’re ready to start having users connect to the environment.
